If (spark.sql(f"""SHOW TABLES IN ").collect() # Check if table exist otherwise create it In my case Hive database and table are called: # Hive variables The next stage is to check if Hive table already exists, otherwise create it. Lst = (spark.sql("SELECT FROM_unixtime(unix_timestamp(), 'dd/MM/yyyy HH:mm:ss.ss') ")).collect() It also shows when the job stared appName = "app1" Next start creating spark session and call the appName etc. The first line will import the usedFunctions as explained before, the second one will import all the needed variables and the third one all spark functions that are used in the python module. Start by importing what modules you need import usedFunctions as uf You can then import the spark functions as follows: from sparkutils import sparkstuff as sįirst start by creating a python file under src package called randomData.py Under packages folder, create a package called sparkutils, for spark specific stuff as shown below I call this folder packages (you create it as a standalone project called packages in P圜harm). You need to create a folder that can be shared by multiple P圜harm projects and put your spark stuff there. You can of course add your own functions there if you need to expand it Calling Spark functions Result_str = ''.join(chars for i in range(length)) Result_str = ''.join(random.choice(chars) for i in range(length-n)) + str(x) Return abs(random.randint(0, numRows) % numRows) * 1.0 Result_str = ''.join(random.choice(letters) for i in range(length)) Under src package, create a python file called usedFunctions.py and create your functions used for generating data there.

(".checks", "/apps/hive/warehouse"),Īssuming that you have your main Python modules under src package, you can import your variables in variables.py as import conf.variables as v Just put all your frequently accessed variable in that file # Hive variablesįullyQualifiedTableName = DB + '.' + tableName Under project directory in P圜harm, create a package called conf and within conf create a python file and call it variables.py. N.B You need to create a virtual environment for each python project in P圜harm Initialisation of variables Hive DW version 3.1.1 and Hadoop 3.1.1 on RHES 7.6.spark-3.0.1-bin-hadoop2.7 package used by P圜harm.P圜harm version 2020.3, Community edition.Anyway this is the gist of it, hopefully some will find it useful. One can optionally store data in a database of choice such as Hive. It is always good to use some numerical functions to create such random data. Later on, I developed the same generator for Scala. Years ago I developed such script for Oracle and adapted it for SAP ASE.

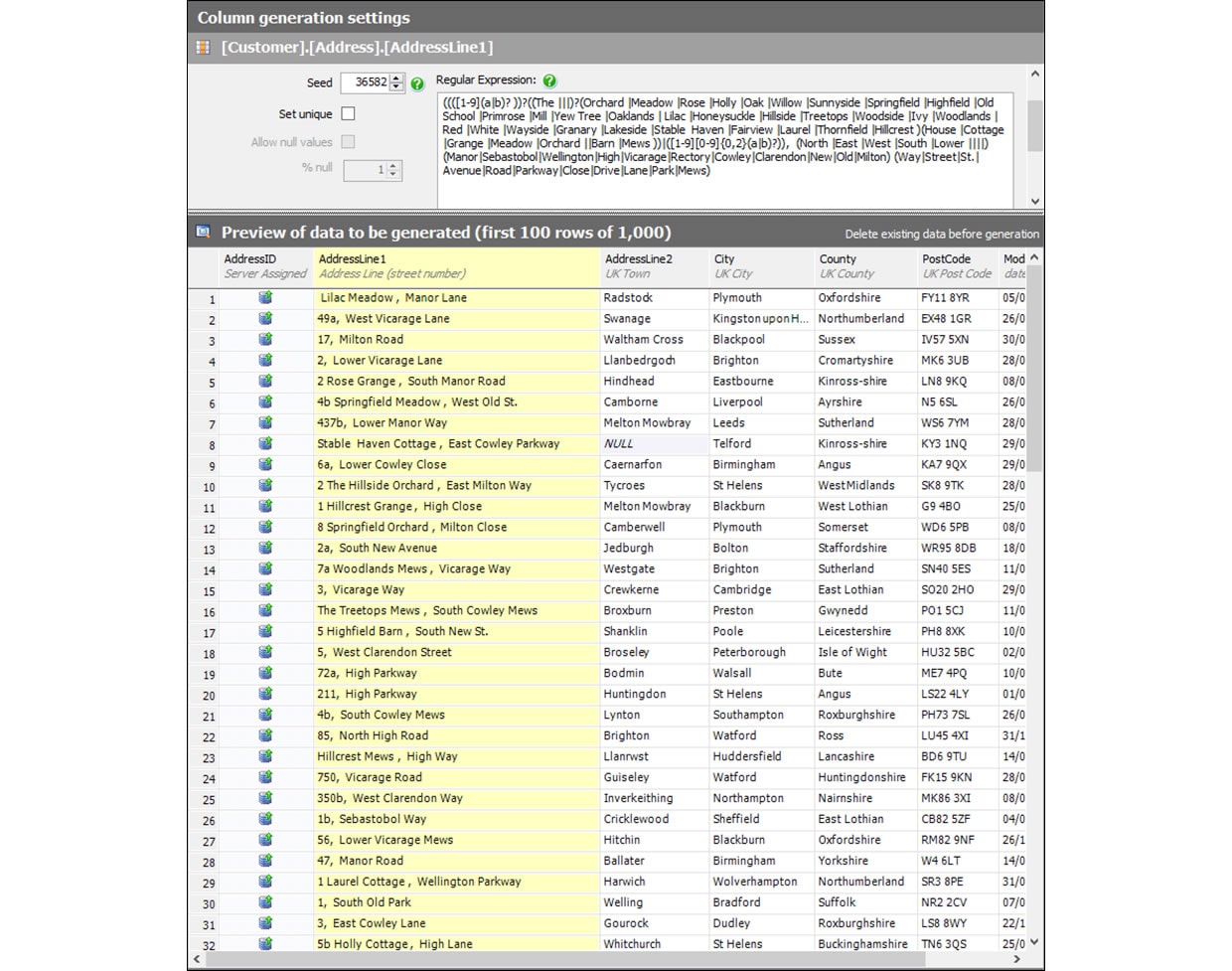

In one of my assignments, I was asked to provide a script to create random data in Spark/PySpark for stress testing.
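To make the data-generating helpers in usedFunctions.py a little more concrete, here is a minimal sketch built around the expressions quoted in this post. The function names, their signatures and the use of a per-call seeded generator are assumptions made for illustration only; they are not the original definitions.

```python
import random
import string


def random_string(length):
    """Return a random string of ASCII letters with the given length."""
    letters = string.ascii_letters
    return ''.join(random.choice(letters) for _ in range(length))


def randomised(seed, num_rows):
    """Return a reproducible pseudo-random float in [0, num_rows).

    Assumption: a private generator seeded per call, so the same seed
    always yields the same value. num_rows must be greater than zero.
    """
    rng = random.Random(seed)
    return abs(rng.randint(0, num_rows) % num_rows) * 1.0


def pad_string(x, chars, length):
    """Prefix the integer x with random characters drawn from chars.

    The result is `length` characters long when x has fewer digits
    than `length`; otherwise str(x) is returned unpadded.
    """
    n = len(str(x))  # number of characters taken up by x itself
    return ''.join(random.choice(chars) for _ in range(length - n)) + str(x)


def pad_single_char(char, length):
    """Repeat a single character `length` times."""
    return ''.join(char for _ in range(length))
```

Helpers like these would typically be called once per generated row, for example `pad_string(row_id, string.ascii_lowercase, 10)` for a fixed-width key column and `randomised(row_id, num_rows)` for a numeric column; `row_id` and `num_rows` here are hypothetical names.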
